Relational Data Source

info

The connection parameters for SQL Server, Oracle, PostgreSQL and Dameng (DM) databases are similar; SQL Server is used as an example.

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Auto creation of metadata collection task | Whether to automatically generate a metadata collection task in X-DAM. Enabled by default, with collection scheduled at 1:00. |
| Connection type | |

Interface Data Source

- WebService

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Address | WebService interface address. After entering the address, click Parse and X-ETL automatically parses it. |
| Http authorization | Enable HTTP authorization and enter the username and password if the interface requires authentication. |
| Method | Select the method based on the address parsing results. |
| Parse path | Path to the data in the XML response. For example: Body/OutputParameters/P_OUT_CONTENT/HEADERS/HEADER. |
| Input mode | Displays the corresponding input parameters based on the selected mode. |
| Output mode | Displays the corresponding output parameters based on the selected mode. |

- RESTful

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Address | RESTful interface address. |
| Http method | Select the request method. |
| Max. single transfer data volume | The maximum data volume returned in a single transfer. |
| Http authorization | Enable HTTP authorization and enter the username and password if the interface requires authentication. |
| Headers param | Header parameters of the HTTP request. |
| Body param | Body parameters of the HTTP request. Info: When Http method is set to GET or DELETE, only Form-data parameters are available. |
| Param type | Displays the corresponding input parameters based on the selected mode. |
| Output param config | Enable it to verify the parameters of the upstream interface. Click fields in the output param window to select them as output results; the result values are filled in automatically. When the response data is parsed, the results are compared with the response data, and the connection fails if any difference is found. |
| Output type | |
| Output result | Arrays or objects selected from the response to serve as the final output. |

Object Model Data Source

| Parameter | Description |
|---|---|
| Local Data Source | Enable it to use the current supOS platform as the data source. |
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Auto creation of metadata collection task | Enable it to automatically generate a metadata collection task in X-DAM. Enabled by default, with collection scheduled at 1:00. |
| supOS Address | Address and port of the source supOS. |
| supOS Tenant | Enter the tenant name when the source supOS is a multi-tenant version. |
| supOS Auth | Use the AK/SK credential generated on the source supOS for data acquisition. |

File Data Source

- Local file directory

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Database file path | Enter an absolute path under /volumes/supfiles/ in local storage. If the path does not exist, the platform creates it automatically. Tip: The path is created inside the dam-etl-xxxxx pod, and you can use the following commands to view files inside. |
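The tip above refers to commands for viewing files in the pod. As a minimal sketch, assuming `kubectl` access to the namespace where X-ETL runs and a pod whose name starts with `dam-etl-`, a helper like the following could list the directory. The function name `list_supfiles` and its optional subdirectory argument are hypothetical and are not part of the platform.

```shell
# Hypothetical helper: list files under /volumes/supfiles/ inside the
# first pod whose name starts with "dam-etl-" in the current namespace.
list_supfiles() {
  subdir="${1:-}"
  # "kubectl get po -o name" prints one "pod/<name>" entry per line.
  pod=$(kubectl get po -o name | grep '^pod/dam-etl-' | head -n 1)
  if [ -z "$pod" ]; then
    echo "no dam-etl pod found" >&2
    return 1
  fi
  # Run ls inside the pod; everything after "--" is the remote command.
  kubectl exec "$pod" -- ls -l "/volumes/supfiles/$subdir"
}
```

Calling `list_supfiles` with no argument lists the top-level directory; passing a subdirectory name lists that directory instead.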

- SMB

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Service address | IP address of the data source server. |
| Smb file path | The absolute path of the data source file to be connected. |
| Username/Password | The username and password used to log in to the data source. |

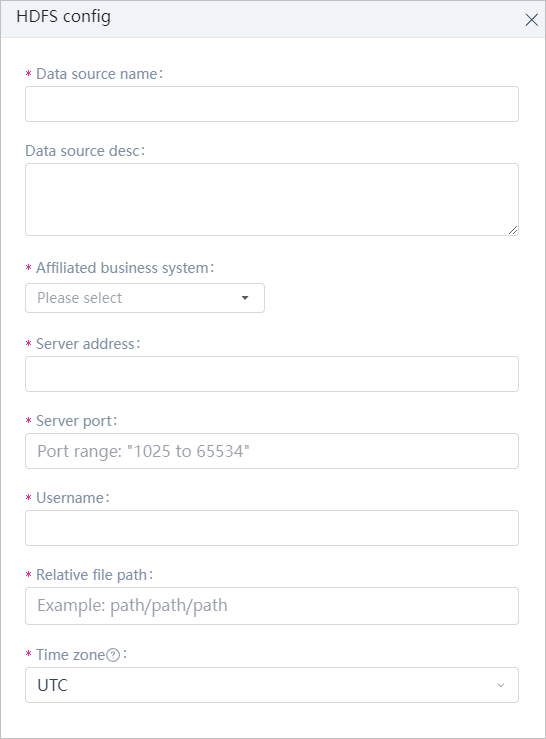

- HDFS

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Server address/port/Username | The IP address and port of the Hadoop server and the username used for login. |
| Relative file path | Relative path of the directory on the Hadoop server. HDFS files generated during data transfer from Hive are stored under it. |

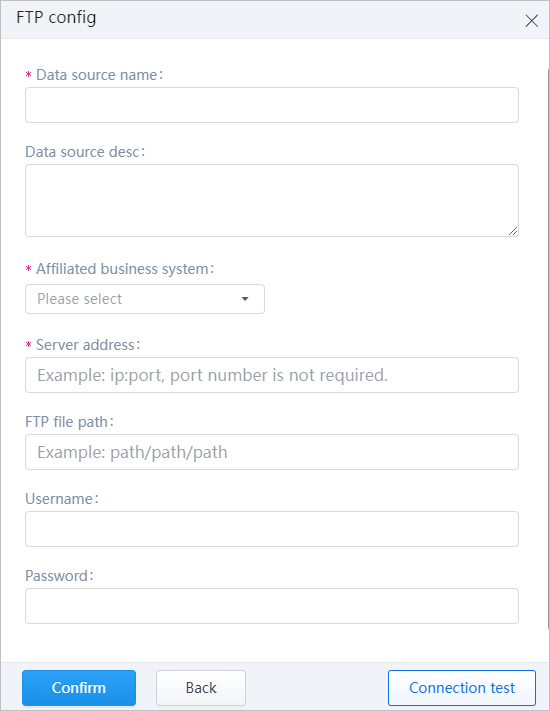

- FTP

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Server address | IP address of the FTP server. |
| Ftp file path | The absolute path of the data file to be connected. |
| Username/Password | The username and password used for login. |

- MINIO

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Service address | IP address of the MINIO server. |
| Bucket name | Name of the MINIO bucket to be connected. |
| File path | The absolute path of the data file to be connected. |
| Username/Password | The username and password used for login. |

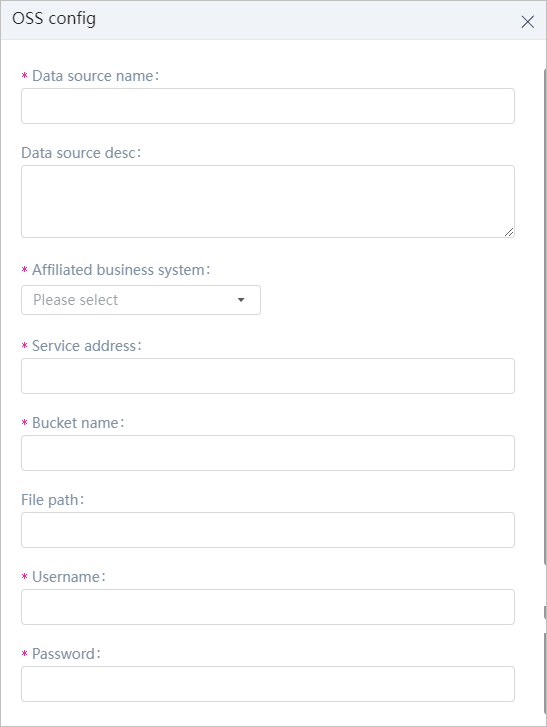

- OSS

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Service address | IP address of the OSS server. |
| Bucket name | Name of the OSS bucket to be connected. |
| File path | The absolute path of the data file to be connected. |
| Username/Password | The username and password used for login. |

Big Data Storage

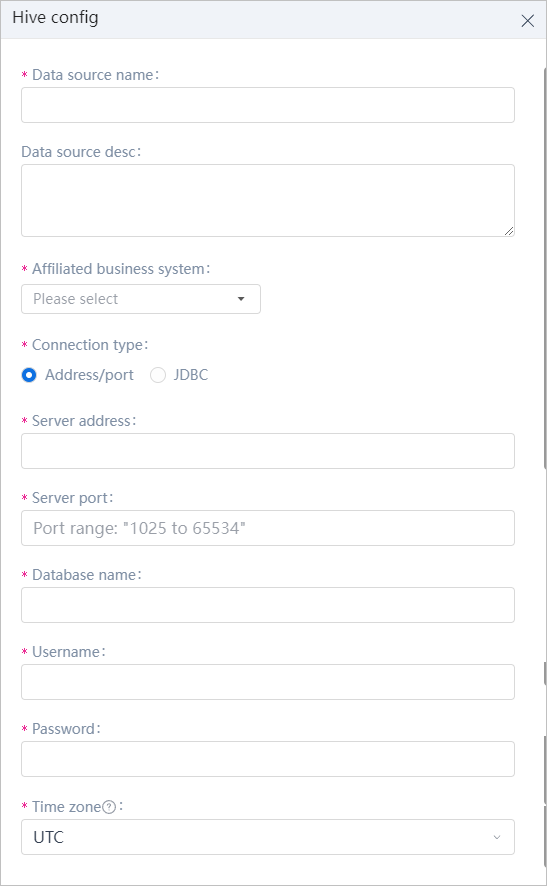

- Hive

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Connection type | |
| Username/Password | The username and password used for database login. |

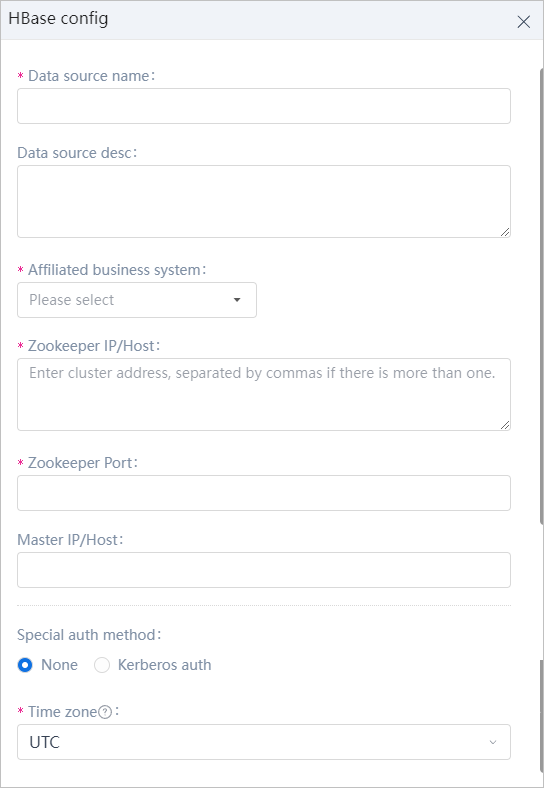

- HBase

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Zookeeper IP/Host/Port | IP address of ZooKeeper. For cluster deployments, separate multiple IPs with commas. |
| Master IP/Host | Master IP or hostname of the ZooKeeper cluster. Leave it empty for standalone deployment. |
| Special auth method | When Kerberos is selected, the path of the Kerberos authentication file is required. |

info

When adding HBase as a data source, the following configurations are required on the X-ETL backend.

- Add HBase cluster deployment network configurations to dam-resource, flink-jobmanager-batch and flink-taskmanager-batch.

kubectl edit deploy dam-resource

- Press i to start editing, and add the following content between spec and containers.

    hostAliases:
    - ip: 192.168.x.x          # hadoop cluster IP
      hostnames:
      - "ubuntu-hadoop01"      # hadoop cluster hostname
    - ip: 192.168.x.x
      hostnames:
      - "ubuntu-hadoop02"
    - ip: 192.168.x.x
      hostnames:
      - "ubuntu-hadoop03"
    - ip: 192.168.x.x
      hostnames:
      - "ubuntu-hadoop04"

- Save and exit the editor, then repeat the same steps for flink-jobmanager-batch and flink-taskmanager-batch.

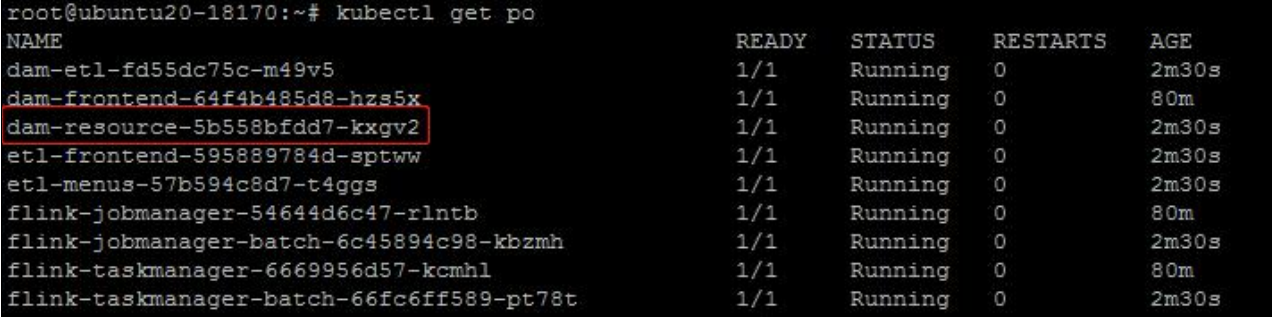

- Look up the pod name, and then access the container.

kubectl get po

kubectl exec -it dam-resource-5b558bfdd7-kxgv2 -- bash

- Inside the container, run the following command to check whether the mappings have been added to the hosts file.

cat /etc/hosts

- Exit the container.
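The manual check above can also be scripted. The sketch below reads hosts-file content on standard input and reports the first hostname that has no mapping. `hosts_has_all` is a hypothetical helper name, and the hostnames in the usage comment are the sample values from the hostAliases configuration, so replace them with your own.

```shell
# Hypothetical helper: succeed only if every hostname passed as an argument
# appears as a hostname field in the hosts-file content read from stdin.
hosts_has_all() {
  content=$(cat)
  for name in "$@"; do
    # Skip comment lines; fields after the first (the IP) are hostnames.
    printf '%s\n' "$content" | awk -v h="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' || { echo "missing mapping: $name" >&2; return 1; }
  done
}

# Usage sketch (pod name taken from the example above):
#   kubectl exec dam-resource-5b558bfdd7-kxgv2 -- cat /etc/hosts \
#     | hosts_has_all ubuntu-hadoop01 ubuntu-hadoop02
```

Reading from standard input keeps the check independent of how the hosts file is fetched, so the same function can be reused for the flink-jobmanager-batch and flink-taskmanager-batch pods.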

Message Queue

- Kafka

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Server address | IP address and port of the Kafka server. |

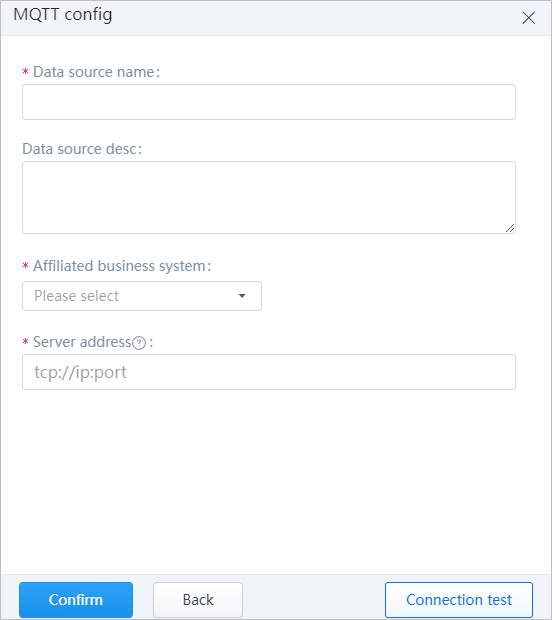

- MQTT

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| Server address | IP address and port of the MQTT server, in the format tcp://ip:port. |

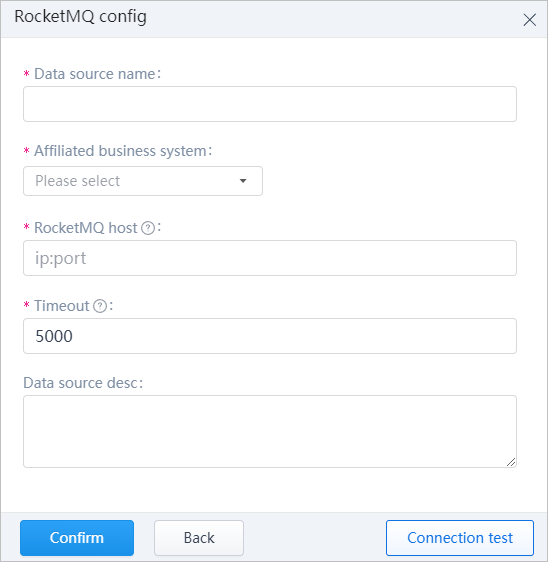
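Because the MQTT server address must follow the tcp://ip:port format, it can help to check a value before entering it. The following is a minimal sketch of such a check. `is_mqtt_address` is a hypothetical helper, and the 1 to 65535 port range is an assumption based on standard TCP port limits, not something the platform documents.

```shell
# Hypothetical helper: succeed when the argument looks like tcp://host:port
# and the port is within 1-65535.
is_mqtt_address() {
  # Require the tcp:// scheme, a host without ':' or '/', and a numeric port.
  printf '%s\n' "$1" | grep -Eq '^tcp://[^:/[:space:]]+:[0-9]{1,5}$' || return 1
  # Everything after the last ':' is the port.
  port=${1##*:}
  [ "$port" -ge 1 ] && [ "$port" -le 65535 ]
}

# Usage sketch with a placeholder address:
#   is_mqtt_address "tcp://192.168.1.10:1883" && echo "format looks valid"
```

The check only validates the shape of the string; it does not confirm that the server is reachable.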

- RocketMQ

| Parameter | Description |
|---|---|
| Affiliated business system | Category under which the data source is displayed in Business system on the Data Connection page. You can add custom systems as needed. |
| RocketMQ host | Host IP and port of the RocketMQ server to be connected. |
| Timeout | Connection timeout duration. The default is 5,000 ms. |